In-Depth

Big Data in the Cloud

Microsoft has cooked up a feast of value-added big data cloud apps featuring Apache Hadoop, MapReduce, Hive and Pig, as well as free apps and utilities for numerical analysis, publishing data sets, data encryption, uploading files to SQL Azure and blobs.

Competition is heating up for Platform as a Service (PaaS) providers such as Microsoft Windows Azure, Google App Engine, VMware Cloud Foundry and Salesforce.com Heroku, but cutting compute and storage charges no longer increases PaaS market share. So traditional Infrastructure as a Service (IaaS) vendors, led by Amazon Web Services (AWS) LLC, are encroaching on PaaS providers by adding new features to abstract cloud computing functions that formerly required provisioning by users. For example, AWS introduced Elastic MapReduce (EMR) with Apache Hive for big data analytics in April 2009. In October 2009, Amazon added a Relational Database Service (RDS) beta to its bag of cloud tricks to compete with SQL Azure.

Microsoft finally countered with a multipronged Apache Hadoop on Windows Azure preview in December 2011, aided by Hadoop consultants from Hortonworks Inc., a Yahoo! Inc. spin-off. Microsoft also intends to enter the highly competitive IaaS market; a breakout session at the Microsoft Worldwide Partner Conference 2012 will unveil Windows Azure IaaS for hybrid and public clouds. In late 2011, Microsoft began leveraging its technical depth in business intelligence (BI) and data management with free previews of a wide variety of value-added Software as a Service (SaaS) add-ins for Windows Azure and SQL Azure (see Table 1).

| Codename | Description | Link to Tutorial |
| --- | --- | --- |
| Social Analytics | Summarizes big data from millions of tweets and other unstructured social data provided by the "Social Analytics" team | bit.ly/Kluwd1 |
| Data Transfer | Moves comma-separated value (CSV) and other structured data to SQL Azure or Windows Azure blobs | bit.ly/IC1DJp |
| Data Hub | Enables data mavens to establish private data markets that run in Windows Azure | bit.ly/IjRCE0 |
| Cloud Numerics | Supports developers who use Visual Studio to analyze distributed arrays of numeric data with Windows High-Performance Clusters (HPCs) in the cloud or on-premises | bit.ly/IccY3o |
| Data Explorer | Provides a UI to quickly mash up big data from various sources and publish the mashup to | bit.ly/IMaOIN |
| Trust Services | Enables programmatically encrypting Windows Azure and SQL Azure data | bit.ly/IxJfqL |
| SQL Azure Security Services | Enables assessing the security state of one or all of the databases on a SQL Azure server | bit.ly/IxJ0M8 |
| Austin | Helps developers process StreamInsight data in Windows Azure | bit.ly/LTL5QP |

Table 1. The SQL Azure Labs team and the StreamInsight unit have published no-charge previews of several experimental SaaS apps and utilities for Windows Azure and SQL Azure. The Labs team characterizes these offerings as "concept ideas and prototypes," and states that they are "experiments with no current plans to be included in a product and are not production quality."

In this article, I'll describe how the Microsoft Hadoop on Windows Azure project eases big data analytics for data-oriented developers and provide brief summaries of free SaaS previews that aid developers in deploying their apps to public and private clouds. (Only a couple require a fee for the Windows Azure resources they consume.) I'll also include instructions for obtaining invitations for the previews, as well as links to tutorials and source code for some of them. These SaaS previews demonstrate to independent software vendors (ISVs) the ease of migrating conventional, earth-bound apps to SaaS in the Windows Azure cloud.

Analyze Big Data with Apache Hadoop on Windows Azure

Apache Hadoop, MapReduce, Hive and the Pig Latin language have a near-monopoly on the mindshare of NoSQL proponents, as well as big data curators and analysts. The SQL Server team announced Project Isotope -- which includes an Apache Hadoop on Windows Azure service, more commonly called HadoopOnAzure, and a distribution for on-premises Windows Server 2008 R2 -- at the Professional Association for SQL Server Summit in October 2011.

At press time, HadoopOnAzure was a community technology preview (CTP) version released to a limited number of testers starting in December 2011; general availability is expected in mid-2012. To obtain an invitation to the CTP, fill out the Microsoft Connect survey (this requires a Windows Live ID, as do several other survey and registration pages discussed here). A preview of the on-premises version is expected in mid-2012.

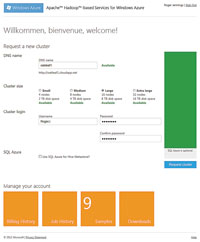

The CTP includes an EMR Portal, which creates temporary, on-demand clusters in Windows Azure provisioned at the Microsoft North Central U.S. (Chicago) datacenter. When you open the HadoopOnAzure site's landing page at hadooponazure.com, click "Sign in" and provide the Windows Live ID you used to request the invitation. Request a cluster by completing the provided form.

Clicking the "Request cluster" button starts provisioning a no-charge Hadoop Distributed File System (HDFS) cluster. Provisioning takes a few minutes, and the cluster lasts for 24 hours. If you don't renew the cluster during its last six hours of life, the system will reclaim the resources for other users.

When the process completes, the page provides tiles for numerous tasks:

- Creating a new MapReduce job

- Writing interactive JavaScript or Hive queries in the Interactive Console

- Setting up a Remote Desktop session with the cluster on Windows Azure

- Opening a TCP port for uploading data with FTPS, for the Hive ODBC driver for Microsoft Excel, or both

- Managing the cluster by setting up data import from the Windows Azure Marketplace DataMarket; a Windows Azure Storage Vault, or ASV (Windows Azure blob storage account); or an Amazon S3 account

Hive Talkin'

Data-oriented .NET developers will probably be more comfortable using the Hive query language, HiveQL (the syntax of which closely resembles ANSI SQL-92), than writing MapReduce jobs in Java or working in the EMR interactive JavaScript Console.

HiveQL generates a set of MapReduce jobs to make using filters, joins and aggregates easier, but is usually slower in execution than a relational database that can handle data of similar size. An Apache Sqoop-based Microsoft SQL Server Connector is available for download.

Sqoop is an open source tool designed for efficiently transferring bulk data between Hadoop and structured data stores such as relational databases. The Hadoop Connector uses MapReduce programs to provide bidirectional data transfer between HDFS or Hive tables and SQL Server 2008 R2 or later.

The connector adds support for SQL Server nchar and nvarchar data types to Hive. But enabling Sqoop requires downloading and installing the Cloudera Hadoop distribution and Sqoop implementation, as well as the Microsoft Java Database Connectivity (JDBC) driver. Therefore, most developers will use the built-in Windows Azure ASV or Amazon S3 blob connectors whenever possible. An important advantage of externally persisted data is that you don't lose it when your free Hadoop cluster times out. An illustrated tutorial describes how to work with ASV files in the Hadoop Command Shell via Remote Desktop Protocol, the Interactive JavaScript Console and the Interactive Hive Console.

Creating a Hive table from an external, tab-delimited text blob requires executing a CREATE EXTERNAL TABLE instruction in the Interactive Hive Console to define the table's schema. Executing this HiveQL statement creates a table of U.S. certificated air carrier flight departure and arrival delays from tab-delimited text files contained in a folder of a Windows Azure blob:

    CREATE EXTERNAL TABLE flightdata_asv (
      year INT,
      month INT,
      day INT,
      carrier STRING,
      origin STRING,
      dest STRING,
      depdelay INT,
      arrdelay INT
    )
    COMMENT 'FAA on-time data'
    ROW FORMAT DELIMITED FIELDS TERMINATED by '9'
    STORED AS TEXTFILE
    LOCATION 'asv://aircarrier/flightdata';

HiveQL doesn't have a native date data type, so dates are specified by separate year, month and day values, which the original data from the U.S. Federal Aviation Administration (FAA) includes. The FIELDS TERMINATED by '9' argument specifies a tab (ASCII character 9) delimiter; substitute "," for CSV files.
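For comma-separated source files, the statement might look like the following sketch. The table name flightdata_csv and the folder name in the LOCATION argument are hypothetical, chosen only for illustration; the column list simply repeats the schema shown earlier. If the console rejects the comment lines, strip them before evaluating the statement.

```sql
-- Hypothetical CSV variant of the flight delay table.
-- Assumptions: the table name flightdata_csv and the folder
-- asv://aircarrier/flightdata_csv are illustrative, not from the tutorial.
CREATE EXTERNAL TABLE flightdata_csv (
  year INT,
  month INT,
  day INT,
  carrier STRING,
  origin STRING,
  dest STRING,
  depdelay INT,
  arrdelay INT
)
COMMENT 'FAA on-time data (CSV)'
-- A comma replaces the tab delimiter used for the original table.
ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
STORED AS TEXTFILE
LOCATION 'asv://aircarrier/flightdata_csv';
```

Bear in mind that ROW FORMAT DELIMITED splits on every occurrence of the delimiter, so this approach suits only CSV files whose fields contain no embedded or quoted commas.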

Type or paste statements in a text box below the read-only display pane and click the Evaluate button to execute them (see Figure 1). The statement inserts rows from every file in the LOCATION 'asv://containername/foldername' argument; asv represents the Windows Azure storage account you specified when setting up Hive data input. Data import from an ASV data source in the same datacenter as your Hadoop clusters (North Central U.S.) is quick; the tutorial described earlier reported that importing about 3 million rows took only about 74 seconds.
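After the table is defined, a few quick checks can confirm that Hive sees the schema and the underlying files. The statements below are a minimal sketch that assumes the flightdata_asv table defined earlier exists; you might need to evaluate them one at a time, and drop the comment lines, depending on how the console handles its input.

```sql
-- List the column names and types Hive recorded for the table.
DESCRIBE flightdata_asv;

-- Peek at a handful of rows to verify that the delimiter split the fields correctly.
SELECT * FROM flightdata_asv LIMIT 5;

-- Count the rows visible across every file in the LOCATION folder.
-- Unlike the LIMIT query, this one runs as a MapReduce job, so expect it to take longer.
SELECT COUNT(*) FROM flightdata_asv;
```

Columns that show up as NULL in the sample rows usually point to a delimiter mismatch or to values that can't be parsed as the declared type.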

Figure 1. Complete the request for a new cluster of a size suitable for your source data in this Metro-centric Elastic MapReduce UI. The DNS name must be globally unique within the Windows Azure compute infrastructure. The number of sample Hadoop applications increased from four to nine in March 2012.

The Hive ODBC driver and Excel Hive add-in combine to enable management types -- who probably are more familiar with worksheets than writing SQL queries -- to visualize data from HiveQL SELECT query result sets. As noted earlier, users must download and install the Hive ODBC driver and Excel add-in of the correct bitness (32- or 64-bit) on their local computers.

The add-in inserts a Hive Data group with a Hive Pane icon in the Excel Data tab. Clicking the icon opens a task pane UI with controls to select a Hive Connection, Hive Objects (tables or views), Columns, Filter Criteria, Aggregate Grouping, Ordering or Row Limiting. Alternatively, you can type your own HiveQL statement. Clicking "Execute Query" executes the composed or custom query and returns the result set to the worksheet, as shown in Figure 2.

Figure 2. The main Elastic MapReduce page provides tiles for performing MapReduce tasks, as well as managing the cluster and your account. The Billing History page is empty because resources for testing HadoopOnAzure are free. Clicking Downloads opens a page from which you can obtain 32- or 64-bit versions of the developer preview for the Hive ODBC driver and its Excel add-in, as well as a PDF or Microsoft Word version of a How-To and FAQ for the driver.

Visualizing the flight delay data in an Excel bar graph with carrier codes on the abscissa (x-axis) and average departure delay hours on the ordinate (y-axis) only requires selecting the appropriate data range and choosing Insert, Charts and Column, and formatting the chart and axis titles (see Figure 3). A tutorial describes in detail how to deploy and use the Hive ODBC driver and Excel add-in. You can download the source data files from my public Windows Live SkyDrive folder, sdrv.ms/J2FULk.
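A result set suitable for such a chart could come from a custom HiveQL aggregate query along these lines. This is a sketch against the flightdata_asv table defined earlier, not the query the tutorial uses; the avg_depdelay alias is an assumption, and the result is expressed in whatever unit the depdelay column holds, so a conversion is needed if the source records delays in minutes and the chart reports hours.

```sql
-- Average departure delay per carrier, one row per carrier code.
-- avg_depdelay is an illustrative alias; AVG ignores NULL delay values.
SELECT carrier, AVG(depdelay) AS avg_depdelay
FROM flightdata_asv
GROUP BY carrier
ORDER BY avg_depdelay DESC;
```

Because Hive compiles the GROUP BY and ORDER BY clauses into MapReduce jobs, even this small query takes noticeably longer than it would on a relational database holding the same rows.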

Figure 3. The Elastic MapReduce Interactive Hive page, shown here in a multiple exposure, persists Windows Azure Storage Vault connection metadata and lets you choose the data source you want in the Tables list. Selecting a table connection displays its fields in a Columns list. Clicking either ">>" button inserts the selection in the writable text box below the display pane.